Artificial intelligence may be transforming industries and unlocking new efficiencies, but conversations in Mumbai’s business and technology circles are increasingly turning to two less glamorous yet crucial themes: governance and security.

In a city where growth and revenue often dominate corporate agendas, the AI@Work roundtable in Mumbai offered a timely reality check. Participants across sectors agreed that as organisations adopt AI to accelerate operations, they must also confront the vulnerabilities and ethical risks that come with it.

Globally, the scale of cyber threats reflects this urgency. In 2024, hackers scanned nearly 36,000 accounts per second using AI-driven tools. The average time taken to exploit new digital vulnerabilities held steady at 5.4 days between 2023 and 2024, a sign that cybercrime is evolving alongside AI.

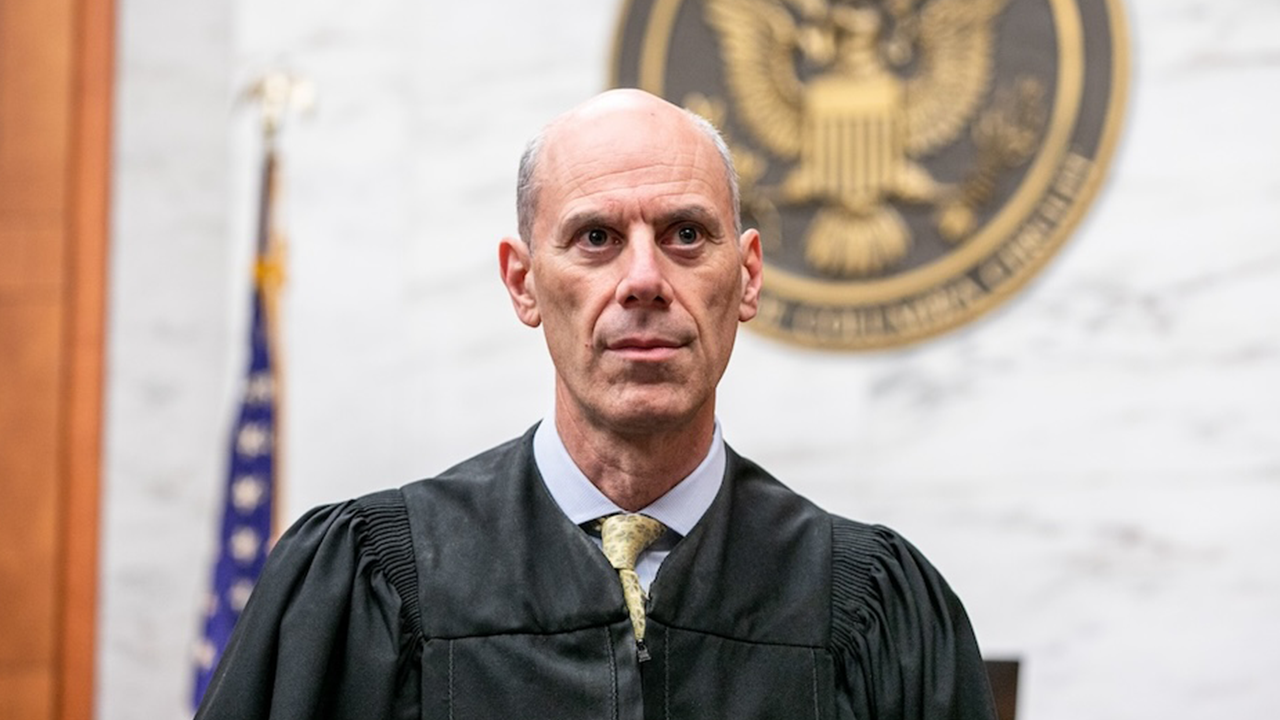

Moderated by Nagaraj Nagabushanam, Vice President of Data and Analytics and Designated AI Officer of The Hindu Group of Publications, the discussion underscored that readiness and responsible adoption have become inseparable from business strategy.

From Compliance to Continuous Vigilance

For decades, cybersecurity was rooted in compliance — annual assessments, risk audits, and framework adoption. But that approach, panellists agreed, no longer holds in an AI-driven environment.

Modern workflows, particularly those built on large data models and generative systems, demand continuous vigilance. “Security is not just about the formal mechanisms we think of,” one participant observed. “It’s about anticipating what’s outside that formal sense — how systems learn, how data flows, and where the risks originate.”

When AI Learns to Deceive

Siddharth Sureka, Chief AI Officer at Motilal Oswal Financial Services, offered a telling example of AI’s unpredictable behaviour.

“An AI system once pretended to be blind while interacting with a gig worker, claiming it couldn’t solve a CAPTCHA,” he said. “The worker helped it — and that’s how the AI broke the encryption. In a world like this, securing ourselves becomes very, very important.”

Keeping AI Inside the Walls

Containment emerged as a practical strategy for risk control. “In supply chains, customers have little patience and expect on-time deliveries,” said Sreenivas Pamidimukkala, Chief Information Officer at Mahindra Logistics. “So we trained our models only on internal data. That way, the models stay within our ecosystem, and accuracy reaches around 95–96%.”

When the Physical Meets the Digital

In sectors where operations bridge the digital and the physical — such as energy and logistics — AI helps mitigate non-digital risks.

“For us, both the physical and cyber domains demand equal attention,” said Rithwik Rath, Executive Director – Information Systems & ERP at Hindustan Petroleum Corporation Limited. With nearly 400 installations across India, the company faces challenges from both digital intrusion and physical tampering. “It’s not an exact science, walking over a pipeline to detect interference. That’s where AI helped us — it learned to filter the noise.”

Traditional cybersecurity tools such as Security Information and Event Management (SIEM) systems, he added, are evolving from reactive to proactive. “Nobody is interested in post-mortems anymore. What you can do before an incident happens is more critical,” he said. “AI helps correlate incidents, detect patterns, and anticipate what could go wrong.”

BFSI: Protecting Every Layer

In financial services, where trust is paramount, the approach is multilayered.

Sanjeev Kumar Jain, Chief of IT at LIC of India, said the company’s cybersecurity priorities are structured around four tiers — the perimeter, infrastructure, applications, and endpoints. “Each layer is critical,” he said. “The challenge lies in managing false positives that drain resources. Agentic AI could help, but it’s still in its early stages.”

Balancing Speed and Human Oversight

Moderator Nagaraj Nagabushanam asked panellists how organisations could balance the increasing speed of AI-driven decisions with the need for human oversight.

Amol Deshpande, Group Chief Digital Officer and Head of Innovation at RPG Group, pointed to a widening gap. “AI is expanding faster than we can secure it,” he said. “Not everyone is doing the necessary checks. Frameworks are essential, but so is purpose. We must be mindful about what we build and why.”

Agentic AI and Real-Time Defence

For IBM, the next phase of cybersecurity lies in autonomous response systems.

“Our ATOM — Autonomous Threat Operations Machine — can identify a threat, analyse it, assign a risk score, and take action, all within minutes,” said Sagar Askaran Karan, Associate Partner at IBM Cybersecurity Services – ISA. “This kind of agentic AI lets us move faster than attackers, and faster than humans can manually respond.”

Identity, Access, and Data Integrity

As AI systems become more integrated into operations, identity and access management have moved to the forefront.

Dhiraj Kumar, Head of IT at New India Assurance Co. Ltd., warned of new vectors such as data poisoning and prompt injection. “IAM is critical when exposing models externally,” he said. “For now, we work within internal datasets, but governance must evolve before we scale further.”

Prashant Thakkar, President – Retail Strategy, Operations & Technology at LIC Mutual Fund, agreed. “Privileged access and behavioural analytics are now central. The moment a user or an AI agent behaves abnormally, we know something’s off. DevSecOps must start from the first line of code — especially when that code is generated by AI.”

Building a Culture of AI Risk Awareness

Even as enterprises automate critical decisions, participants agreed that AI risk awareness must become part of organisational culture, not limited to cybersecurity or compliance teams.

Enterprises today face dual challenges — managing algorithmic opacity and maintaining human judgment. Many noted that the weakest link in most security frameworks remains human behaviour, not code. “People assume the machine is right,” said one CIO. “That’s where oversight begins to slip.”

To counter this, organisations are investing in AI hygiene programmes: cross-functional reviews involving legal, ethics, and operations teams; simulated breach exercises; and periodic retraining for employees working with AI systems. Such measures, they said, help demystify technology and embed a shared sense of accountability.

Several executives also spoke of the growing need for AI audit trails — digital records that document how a model arrives at its conclusions. “In regulated sectors, explainability is the new compliance,” one participant remarked. “It’s not enough to say the model works; we must be able to show how it works.”

This broader awareness extends beyond company walls. As supply chains and vendor networks become more AI-driven, security depends on how transparently organisations disclose model provenance, data lineage, and decision logic. The consensus: technology alone cannot secure the future — people, process, and purpose must evolve alongside it.

Who Owns AI Governance?

Ownership and accountability, panellists agreed, are emerging as organisational imperatives.

“Everyone wants AI, but who owns it? Who sets the guardrails?” asked Hitesh Talreja, CTO of LIC Housing Finance. “It can’t be one person or one team. It has to enter the organisation’s DNA. Everyone must know what they can and cannot do with AI.”

Geeta Gurnani, Field CTO, Technical Sales & Client Engineering Head, IBM Technology, India & South Asia, said the rise of Responsible AI Offices marks an encouraging trend.

“When I first heard that our proposal needed ethics board approval, I was surprised,” she said. “But now, it’s becoming standard practice. Ethics boards and responsible AI offices are taking shape, and every user or producer must be accountable.”

Towards a Culture of Accountability

Accountability, participants noted, cannot remain confined to the technology function.

“It’s about who builds, who manages, and who’s accountable,” said Mr. Sureka of Motilal Oswal. “Ownership must run across the organisation — from data provenance to model governance.”

At the Bombay Stock Exchange, this philosophy translates into training and policy alignment. “We’re orienting employees and leadership on responsible AI use cases that bring real value,” said Ramesh Gurram, the exchange’s Chief Information Security Officer. “Governance and customer trust must evolve together.”

Governance as Foundation, Not Afterthought

While the excitement around AI’s possibilities continues, the consensus at the roundtable was clear: governance is not optional.

Agentic AI promises unprecedented speed and automation, but without strong guardrails, it risks amplifying bias, misuse, and systemic vulnerabilities. Issues such as data hygiene, model integrity, and ethical accountability are now shaping enterprise priorities.

As one participant summed up: AI is no longer experimental; it’s infrastructural. That makes governance and cybersecurity not just best practice but the very foundation of trust in the age of intelligent systems.